Facial Image Analysis and Editing

Introduction

In [Kar et al. IEEE TIFS2021], we propose a novel face recognition method called Local Modified Zernike Moment per unit Mass (LMZMPM). It is invariant to illumination, scaling, noise, in-plane rotation, and translation, and it retains the orthogonality and other inherent properties of Zernike Moments (ZMs). LMZMPM is computed for each pixel over a 3 × 3 neighborhood, and the complex tuple containing both its phase and magnitude coefficients is taken as the extracted feature. Because the feature keeps both the phase and the magnitude components, it carries more information about the image and preserves both edge and structural information. We also propose a hybrid similarity measure that combines the Jaccard similarity with the L1 distance, and we apply it to the extracted feature set for classification. We evaluate robustness to varying illumination on the CMU-PIE and extended Yale B databases, obtaining average Rank-1 Recognition (R1R) accuracies of 99.8% and 98.66% respectively. To assess reliability under variations in noise, rotation, scaling, and translation, we evaluate the method on the AR database and obtain an average R1R higher than that of recent state-of-the-art methods. The method also achieves very high recognition rates on Heterogeneous Face Recognition, with 100% on CUFS and 98.80% on CASIA-HFB.
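To make the idea of a hybrid similarity measure concrete, the following is a minimal sketch of one way a Jaccard similarity and an L1 distance could be combined for Rank-1 matching. It is not the formulation from the paper: the weighted (min/max) form of the Jaccard similarity, the 1 / (1 + d) mapping of the L1 distance, the mixing weight `alpha`, and all function names are assumptions chosen for illustration, and the feature vectors are assumed to be non-negative real values (for example, magnitude coefficients).

```python
import numpy as np

def jaccard_similarity(a, b):
    """Weighted Jaccard similarity, sum(min) / sum(max), for non-negative vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = np.maximum(a, b).sum()
    # Two all-zero vectors are treated as identical.
    return 1.0 if denom == 0 else float(np.minimum(a, b).sum() / denom)

def l1_similarity(a, b):
    """Map the L1 distance into (0, 1], where 1 means identical vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(1.0 / (1.0 + np.abs(a - b).sum()))

def hybrid_similarity(a, b, alpha=0.5):
    """Hypothetical blend: alpha weights the Jaccard term, (1 - alpha) the L1 term."""
    return alpha * jaccard_similarity(a, b) + (1.0 - alpha) * l1_similarity(a, b)

def rank1_match(probe, gallery, alpha=0.5):
    """Return the index of the gallery feature vector most similar to the probe."""
    scores = [hybrid_similarity(probe, g, alpha) for g in gallery]
    return int(np.argmax(scores))
```

Under these assumptions, `rank1_match(probe, gallery)` returns the index of the best-scoring gallery entry, which is the decision that a Rank-1 Recognition accuracy is measured on.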

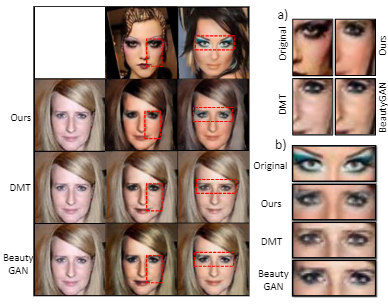

Facial feature analysis can also assist plastic surgeons in assessing patients objectively [Ho et al. 2018] and can be used to predict perceived attractiveness [Wei et al. 2021]. In [Organisciak et al. ICPR2020], we further propose an end-to-end holistic approach to transfer makeup styles effectively between two low-resolution images. The idea is built upon a novel weighted multi-scale spatial attention module, which identifies salient pixel regions in low-resolution images at multiple scales and uses channel attention to determine the most effective attention map. This design provides two benefits. First, low-resolution images are usually blurry to different extents, so a multi-scale architecture can select the most effective convolution kernel size for spatial attention. Second, makeup is applied at both a macro level (foundation, fake tan) and a micro level (eyeliner, lipstick), so different scales can excel at extracting different makeup features. We develop an Augmented CycleGAN network that embeds our attention modules at selected layers to transfer makeup most effectively. We test our system on the FBD data set, which consists of many low-resolution facial images, and demonstrate that it outperforms state-of-the-art methods, particularly in transferring makeup for blurry images and partially occluded images.
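The weighted multi-scale spatial attention idea described above can be illustrated with a small NumPy sketch. This is not the network from the paper: the mean filters stand in for learned convolutions of different kernel sizes, the softmax over globally pooled attention maps stands in for a learned channel attention layer, and the kernel sizes and function names are assumptions made for illustration only.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def box_filter(img, k):
    """Mean filter of odd size k with edge padding (stand-in for a learned k x k conv)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def multi_scale_spatial_attention(features, kernel_sizes=(3, 5, 7)):
    """features has shape (C, H, W); returns reweighted features and per-scale weights."""
    features = np.asarray(features, dtype=float)
    # Collapse channels into one spatial descriptor of shape (H, W).
    pooled = features.mean(axis=0)
    # One spatial attention map per kernel size, stacked to shape (S, H, W).
    maps = np.stack([sigmoid(box_filter(pooled, k)) for k in kernel_sizes])
    # Parameter-free stand-in for channel attention over the scale maps:
    # global average pooling per map, then a softmax across scales.
    logits = maps.mean(axis=(1, 2))
    weights = np.exp(logits - logits.max())
    weights /= weights.sum()
    # Weighted sum of the per-scale maps gives the final (H, W) attention map.
    attention = np.tensordot(weights, maps, axes=1)
    return features * attention[None, :, :], weights
```

In a trained model, both the convolutions and the scale weighting would be learned, so the weights could favour whichever kernel size suits the blur level of the input; here they are fixed functions of the input and only show the data flow.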

Readers are also referred to a closely related project on emotion analysis and transfer for facial expressions and body movements.


Publications

David Sainsbury

Consultant Cleft and Plastic Surgeon, The Newcastle Upon Tyne Hospitals NHS Foundation Trust