Physically-based Character Animation

Introduction

With the advancement of motion tracking hardware such as the Microsoft Kinect, synthesizing human-like characters from movements captured in real time has become increasingly important. Traditional kinematics and dynamics approaches perform sub-optimally when the captured motion is noisy or incomplete. In [Shum and Ho VRST2012], we proposed a unified framework to control physically simulated characters with live captured motion from Kinect. Our framework can synthesize any posture in a physical environment using external forces and torques computed by a PD controller. The major problem with Kinect is the incompleteness of the captured posture: some degrees of freedom (DOFs) are missing due to occlusion and noise. We propose to search for the best-matched posture in a motion database constructed in a dimensionality-reduced space, and to substitute the missing DOFs into the live captured data. Experimental results show that our method can synthesize realistic character movements from noisy captured motion. The proposed algorithm is computationally efficient and can be applied to a wide variety of interactive virtual reality applications such as motion-based gaming, rehabilitation and sports training.
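The two ideas in the paragraph above, filling in missing DOFs from a posture database and driving the character with a PD controller, can be illustrated with a minimal sketch. It is not the implementation from [Shum and Ho VRST2012]: the function names, the gains `kp` and `kd`, and the toy three-DOF database are all assumptions made for illustration, and the nearest-posture search is done directly over the observed joint values rather than in a dimensionality-reduced space.

```python
import math


def pd_torque(target, current, velocity, kp=300.0, kd=30.0):
    """Per-DOF PD control: torque = kp * (target - current) - kd * velocity.

    The gains kp and kd are illustrative values, not taken from the paper.
    """
    return [kp * (t - q) - kd * v for t, q, v in zip(target, current, velocity)]


def fill_missing_dofs(captured, database):
    """Replace None entries in `captured` with values from the closest database posture.

    Distance is measured only over the DOFs that were actually observed.
    Simplification: the search runs in joint space, not in a reduced space.
    """
    observed = [i for i, value in enumerate(captured) if value is not None]

    def distance(posture):
        return math.sqrt(sum((posture[i] - captured[i]) ** 2 for i in observed))

    best = min(database, key=distance)
    return [best[i] if value is None else value for i, value in enumerate(captured)]


# Hypothetical database of three postures, each with three DOFs (radians).
database = [
    [0.0, 0.0, 0.0],
    [0.5, 1.0, 0.2],
    [1.0, 2.0, 0.4],
]

# Live capture with the second DOF missing (for example, occluded).
captured = [0.45, None, 0.25]

# Complete the posture, then compute the torques that pull the simulated
# character from its current state toward the completed target posture.
target = fill_missing_dofs(captured, database)
torques = pd_torque(target, current=[0.4, 0.9, 0.2], velocity=[0.0, 0.1, 0.0])
```

In this toy case the second database posture is the closest match on the observed DOFs, so its value for the missing DOF is borrowed while the observed values are kept unchanged.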

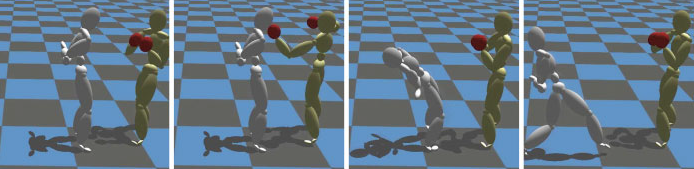

Interactive generation of reactive motions for virtual humans as they are hit, pushed and pulled is important to many applications, such as computer games. In [Komura et al. CASA2005], we propose a new method to simulate reactive motions during arbitrary bipedal activities, such as standing, walking or running. It is based on momentum-based inverse kinematics and motion blending. When generating the animation, the user first imports the primary motion to which the perturbation is to be applied. According to the condition of the impact, the system selects a reactive motion from a database of pre-captured stepping and reactive motions. It then blends the selected motion into the primary motion using momentum-based inverse kinematics. Since the reactive motions can be edited in real time, the criteria for motion search can be much more relaxed than in previous methods, and the computational cost of the search is therefore reduced. Using our method, it is possible to generate reactive motions by applying external perturbations to the characters at arbitrary moments while they are performing an action.
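The select-then-blend pipeline described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the method of [Komura et al. CASA2005]: the momentum-based inverse kinematics step is replaced here by a plain per-joint cross-fade, and the function names, the two-component impact vector, and the toy clips are hypothetical.

```python
def select_reactive_motion(impact, database):
    """Pick the clip whose recorded impact vector is closest to the observed one."""
    def squared_distance(entry):
        return sum((a - b) ** 2 for a, b in zip(entry["impact"], impact))
    return min(database, key=squared_distance)


def smoothstep(t):
    """Ease-in/ease-out weight, clamped to the range [0, 1]."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def blend_motions(primary, reactive, start, blend_frames):
    """Cross-fade from `primary` into `reactive`, beginning at frame `start`.

    Each motion is a list of frames; each frame is a list of joint angles.
    Plain linear interpolation stands in for momentum-based inverse kinematics.
    """
    blended = [list(frame) for frame in primary[:start]]
    for k, reactive_frame in enumerate(reactive):
        weight = smoothstep((k + 1) / blend_frames)
        # Hold the last primary frame if the primary motion runs out.
        primary_frame = primary[min(start + k, len(primary) - 1)]
        blended.append([(1.0 - weight) * p + weight * r
                        for p, r in zip(primary_frame, reactive_frame)])
    return blended


# Hypothetical database: each clip stores the impact it was captured under.
database = [
    {"name": "push_from_front", "impact": (0.0, -1.0), "frames": [[1.0, 2.0]] * 4},
    {"name": "push_from_left", "impact": (1.0, 0.0), "frames": [[-1.0, 0.5]] * 4},
]

primary = [[0.0, 0.0]] * 4                      # toy primary motion, two joints
clip = select_reactive_motion((0.1, -0.8), database)
result = blend_motions(primary, clip["frames"], start=2, blend_frames=2)
```

Because the blend adapts the selected clip to the primary motion, the search only needs a roughly matching clip, which reflects the relaxed search criteria mentioned above.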

Readers are also referred to a closely related project on human motion synthesis in 3D.

Publications

The Team